BIPS: Bi-modal Indoor Panorama Synthesis via Residual Depth-Aided Adversarial Learning

Oct 21, 2022

Changgyoon Oh*

Wonjune Cho*

Yujeong Chae*

Daehee Park*

Lin Wang

Kuk-Jin Yoon (* Equal Contribution)

Abstract

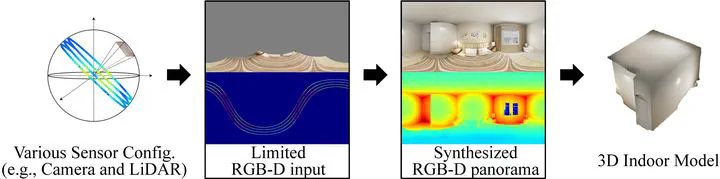

Providing omnidirectional depth along with RGB information is important for numerous applications. However, as omnidirectional RGB-D data is not always available, synthesizing RGB-D panorama data from limited information about a scene can be useful. Some prior works have tried to synthesize RGB panorama images from perspective RGB images; however, they suffer from limited image quality and cannot be directly extended to RGB-D panorama synthesis. In this paper, we study a new problem: RGB-D panorama synthesis under various configurations of cameras and depth sensors. Accordingly, we propose a novel bi-modal (RGB-D) panorama synthesis (BIPS) framework. In particular, we focus on indoor environments, where the RGB-D panorama can provide a complete 3D model for many applications. We design a generator that fuses the bi-modal information and train it via residual depth-aided adversarial learning (RDAL). RDAL makes it possible to synthesize realistic indoor layout structures and interiors by jointly inferring the RGB panorama, layout depth, and residual depth. In addition, as there is no tailored evaluation metric for RGB-D panorama synthesis, we propose a novel metric (FAED) to effectively evaluate its perceptual quality. Extensive experiments show that our method synthesizes high-quality indoor RGB-D panoramas and provides more realistic 3D indoor models than prior methods. Code is available at

.

Type

Publication

ECCV 2022